Difference between revisions of “Auto clustering of galaxies after dimensionality reduction”

*This work is divided into two parts.

*The first part reduces the galaxy data to a low-dimensional space with a VAE.

==Datasets==

*DECaLS

*As a first step, we select the galaxy data whose shape is [3*256*256] and save the paths of the matching files to a text file, which constitutes our training set. An example of this text file is shown in the figure below:

[[File:微信图片_20220304222539.png|500px|center|jumengting]]

*Out of more than 300,000 images, 290,613 galaxies matching the shape condition were selected.

==VAE Method==

*The neural network of the VAE is constructed as follows:

===First Method===

[[File:VAE_NN.png|800px|center|jumengting]]

*The neural network used in the experiments, built to resemble the structure in the original text, is shown in the figure.

*First, GalSim is used to generate galaxy information files from random parameters. Deep learning requires a large number of images with parameter labels, but manual annotation is costly and demands expertise from the annotator, so generating a dataset together with its parameters is a more convenient experimental approach.

*Because real galaxy images vary in size, the size of the images generated with GalSim is also randomized. The Python library is used to generate 50,000 galaxies, which are split into a training set, a test set, and a validation set in the ratio 8:1:1. To handle images of different sizes, this work uses two-dimensional data with a standard size of 128*128 pixels: images larger than 128*128 pixels are cropped around the center, and images smaller than 128*128 pixels are padded at the edges.
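The 8:1:1 partition and the crop-or-pad step described above can be sketched in plain Python as follows. This is a minimal illustration, not the code used in the experiments: the function names `split_811` and `crop_or_pad`, the fixed random seed, and the fill value of zero are all assumptions made for the example.

```python
import random


def split_811(items, seed=0):
    """Shuffle items and split them into train/test/validation at 8:1:1."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 8 // 10
    n_test = len(items) // 10
    train = items[:n_train]
    test = items[n_train:n_train + n_test]
    val = items[n_train + n_test:]
    return train, test, val


def crop_or_pad(image, size=128, fill=0.0):
    """Return a size x size image: center-crop long axes, pad short ones.

    `image` is a 2D list of rows. The fill value is an assumption; the
    text only says that the edges of small images are filled.
    """
    def fit(seq, make_fill):
        n = len(seq)
        if n >= size:                       # keep the central part
            start = (n - size) // 2
            return list(seq[start:start + size])
        before = (size - n) // 2            # pad both edges
        after = size - n - before
        return (
            [make_fill() for _ in range(before)]
            + list(seq)
            + [make_fill() for _ in range(after)]
        )

    rows = [fit(row, lambda: fill) for row in image]
    return fit(rows, lambda: [fill] * size)


train, test, val = split_811(range(50_000))
print(len(train), len(test), len(val))      # 40000 5000 5000
```

Cropping and padding are applied per axis, so an image that is too wide but too short is cropped horizontally and padded vertically.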

===Second NN===

*The second task fits the data to multiple parameters.

[[File:Nn_111.png|500px|right|jumengting]]

==Result==

*The above network fits the galaxy data to a single parameter using a 2D convolutional neural network. The following are the training results for one parameter, the star magnitude.

===Generate data Second Method===

*GalSim result.

===Ground-Truth data Second Method===

*CANDELS

===Latent variable dimensional analysis===

*The number of latent-space dimensions is fixed, the network is fitted by gradient descent, and the losses are observed. The following figure shows the losses after 100 training epochs for the different latent-space dimensions:

[[File:下载11.png|500px|center|jumengting]]

*SSIM values of the reconstructions for the different latent variable dimensions, evaluated per category.

[[File:Ssim num1.jpg|500px|center|jumengting]]

*The above shows the representations in the different latent spaces.

*The higher the dimensionality of the latent variable, the more information from the high-dimensional space it can represent, and the better the quality of the reconstructed image.

*Therefore, balancing the dimensionality of the latent variable against the quality of the reconstructed images, this work selects the results obtained with an MSE loss function and a 40-dimensional latent variable for further analysis.

*The above is the first stage.

===Latent variables and galaxy morphology===

*Some reconstructed images.

[[File:Xiang1.jpg|500px|center|jumengting]]

*Latent Space Analysis.

[[File:All.jpg|500px|center|jumengting]]

*A random forest classifies the latent variables well. The details will be published in the paper.

===Outlier Latent Variable===

*We find 1,308 outliers, accounting for 0.417% of all galaxy images.

[[File:Xiyou (1).jpg|500px|center|jumengting]]

*The outliers in the latent variable space are extracted to find galaxy images with rare morphological features and anomalous galaxy images.

==Domain Adaptation== |

|||

==Else== |

|||

===Data=== |

|||

Waiting... |

|||

* In this work, we take two different surveys in DESI as an example of transfer between DECaLS and BASS+MaLS overlapping sky region data. |

|||

[[File:Fig2.png|500px|center|jumengting]] |

|||

===Question=== |

|||

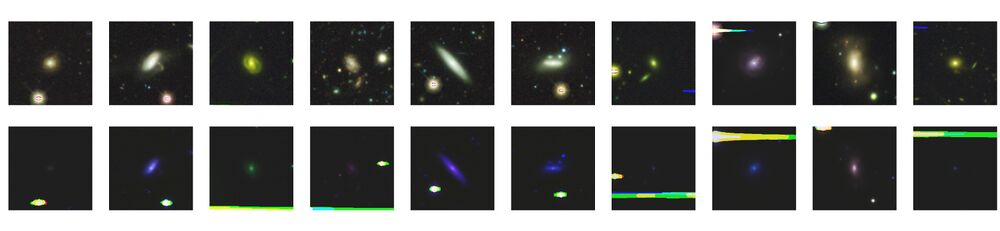

* Apply VAE to DECaLS and non-DECaLS to view the latent variable distance. |

|||

* Like. |

|||

[[File:In out like.jpg|1000px|center|jumengting]] |

|||

* DisLike. |

|||

[[File:In out dislike.jpg|1000px|center|jumengting]] |

|||

===Methods===

*The Domain Adaptation of VAE:

===Results===

See the [https://doi.org/10.1093/mnras/stad3181 paper].

The data are available at: http://202.127.29.3/~shen/VAE/

(II) The neural network of the VAE is constructed as follows:

 VAE(
   (encoder): Sequential(
     (0): Sequential(
       (0): Conv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
       (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
       (2): LeakyReLU(negative_slope=0.01)
     )
     (1): Sequential(
       (0): Conv2d(32, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
       (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
       (2): LeakyReLU(negative_slope=0.01)
     )
     (2): Sequential(
       (0): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
       (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
       (2): LeakyReLU(negative_slope=0.01)
     )
     (3): Sequential(
       (0): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
       (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
       (2): LeakyReLU(negative_slope=0.01)
     )
     (4): Sequential(
       (0): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
       (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
       (2): LeakyReLU(negative_slope=0.01)
     )
   )
   (fc_mu): Linear(in_features=32768, out_features=35, bias=True)
   (fc_var): Linear(in_features=32768, out_features=35, bias=True)
   (decoder_input): Linear(in_features=35, out_features=32768, bias=True)
   (decoder): Sequential(
     (0): Sequential(
       (0): ConvTranspose2d(512, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), output_padding=(1, 1))
       (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
       (2): LeakyReLU(negative_slope=0.01)
     )
     (1): Sequential(
       (0): ConvTranspose2d(256, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), output_padding=(1, 1))
       (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
       (2): LeakyReLU(negative_slope=0.01)
     )
     (2): Sequential(
       (0): ConvTranspose2d(128, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), output_padding=(1, 1))
       (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
       (2): LeakyReLU(negative_slope=0.01)
     )
     (3): Sequential(
       (0): ConvTranspose2d(64, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), output_padding=(1, 1))
       (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
       (2): LeakyReLU(negative_slope=0.01)
     )
   )
   (final_layer): Sequential(
     (0): ConvTranspose2d(32, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), output_padding=(1, 1))
     (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
     (2): LeakyReLU(negative_slope=0.01)
     (3): Conv2d(32, 3, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
     (4): Tanh()
   )
 )
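The layer sizes in the printout can be cross-checked with a little arithmetic. The sketch below applies the standard output-size formulas for `Conv2d` and `ConvTranspose2d` (dilation 1) to a 3*256*256 input. It assumes that the 32768 features produced by `decoder_input` are reshaped to 512*8*8 before the decoder, which the printout does not show; the helper names `conv_out` and `deconv_out` are made up for this example.

```python
def conv_out(size, kernel=3, stride=2, padding=1):
    """Spatial output size of a Conv2d layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel=3, stride=2, padding=1, output_padding=1):
    """Spatial output size of a ConvTranspose2d layer (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


size = 256                        # input images are 3 x 256 x 256
for _ in range(5):                # five stride-2 encoder blocks
    size = conv_out(size)
flat = 512 * size * size          # 512 channels after the last block
print(size, flat)                 # 8 32768

for _ in range(5):                # four decoder blocks plus final_layer
    size = deconv_out(size)
print(size)                       # 256
```

The flattened encoder output, 512*8*8 = 32768, matches `in_features` of `fc_mu` and `fc_var`, and the five transposed convolutions bring the 8*8 feature map back to 256*256; the last `Conv2d` (stride 1, padding 1) leaves that size unchanged.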

(III) The number of latent-space dimensions is fixed, the network is fitted by gradient descent, and the losses are observed. The following figure shows the losses after 100 training epochs for the different latent-space dimensions:

[[File:下载11.png|500px|center]]
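For orientation, a common form of the loss monitored when training a VAE is a reconstruction term plus a KL term. The sketch below is a plain-Python illustration under assumptions: it uses MSE for the reconstruction term, treats the output of `fc_var` as a log-variance (a widespread convention that the source does not confirm), and introduces a hypothetical `kl_weight` parameter.

```python
import math


def mse(x, x_hat):
    """Mean squared error between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent dims."""
    return -0.5 * sum(
        1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var)
    )


def vae_loss(x, x_hat, mu, log_var, kl_weight=1.0):
    """Reconstruction error plus weighted KL term (kl_weight is assumed)."""
    return mse(x, x_hat) + kl_weight * kl_to_standard_normal(mu, log_var)


# Toy values: a 4-pixel "image" and a 2-dimensional latent code.
loss = vae_loss(
    x=[0.0, 0.5, 1.0, 0.5],
    x_hat=[0.1, 0.4, 0.9, 0.5],
    mu=[0.2, -0.1],
    log_var=[0.0, 0.0],
)
print(loss)
```

The KL term vanishes when the encoder outputs a zero mean and unit variance, so with that setting the loss reduces to the reconstruction error alone.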

The following are the different representations in different latent spaces:

[[File:x123.png|500px|center]]

[[File:x124.png|500px|center]]

[[File:x125.png|500px|center]]

[[File:x126.png|500px|center]]

[[File:x127.png|500px|center]]

[[File:x128.png|500px|center]]

The higher the dimensionality of the latent variable, the more information in the high-dimensional space it can represent, and the better the quality of the reconstructed image.

The above is the first stage.

The address of the data is as follows: http://202.127.29.3/~shen/VAE/

Latest revision as of 10:38, 21 November 2024 (Thursday)

Introduction

- This work is divided into two parts.

- The first part reduces the galaxy data to a low-dimensional space with a VAE.

Datasets

- DECaLS

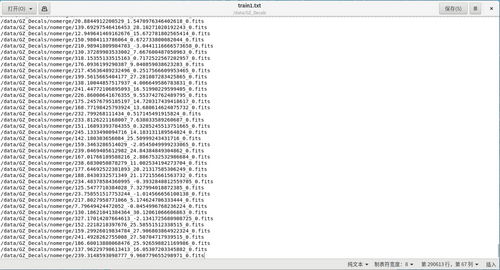

- As a first step, we select the galaxy data whose shape is [3*256*256] and save the paths of the matching files to a text file, which constitutes our training set. An example of this text file is shown in the figure below:

- Out of more than 300,000 images, 290,613 galaxies matching the shape condition were selected.

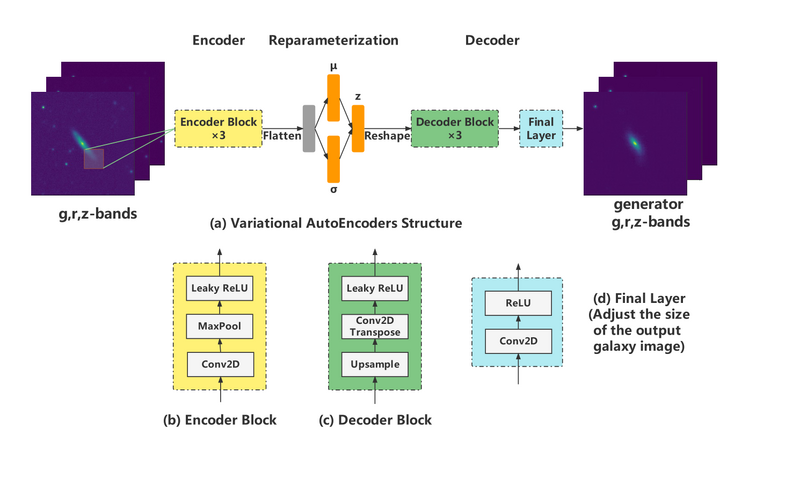
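The shape filter described above can be sketched as follows. This is only an illustration: the toy catalogue, the file paths, and the helper names `select_paths` and `write_path_list` are hypothetical, and in practice the shapes would be read from the image files themselves.

```python
import os
import tempfile

EXPECTED_SHAPE = (3, 256, 256)


def select_paths(shapes_by_path, expected=EXPECTED_SHAPE):
    """Return, in sorted order, the paths whose image shape equals `expected`."""
    return sorted(
        path for path, shape in shapes_by_path.items()
        if tuple(shape) == expected
    )


def write_path_list(paths, list_file):
    """Write one path per line; this text file defines the training set."""
    with open(list_file, "w", encoding="utf-8") as fh:
        for path in paths:
            fh.write(path + "\n")


# Toy catalogue standing in for the real image files (hypothetical paths).
catalogue = {
    "cutouts/a.npy": (3, 256, 256),
    "cutouts/b.npy": (3, 128, 128),
    "cutouts/c.npy": (3, 256, 256),
}
selected = select_paths(catalogue)
list_file = os.path.join(tempfile.mkdtemp(), "train_paths.txt")
write_path_list(selected, list_file)
print(selected)  # ['cutouts/a.npy', 'cutouts/c.npy']
```

Keeping only a list of paths, rather than copying the images, lets a data loader read the selected files lazily during training.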

VAE Method

- The neural network of VAE structure is constructed as follows:

Result

Latent variable dimensional analysis

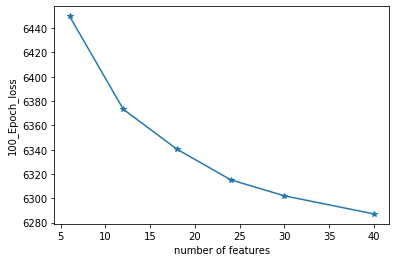

- The number of latent-space dimensions is fixed, the network is fitted by gradient descent, and the losses are observed. The following figure shows the losses after 100 training epochs for the different latent-space dimensions:

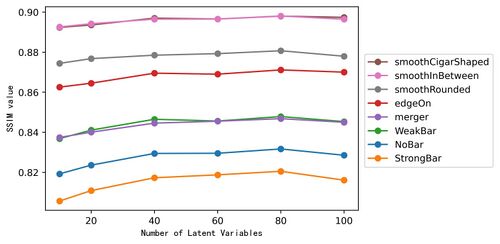

- SSIM values of the reconstructions for the different latent variable dimensions, evaluated per category.

- The above shows the representations in the different latent spaces.

- The higher the dimensionality of the latent variable, the more information from the high-dimensional space it can represent, and the better the quality of the reconstructed image.

- Therefore, balancing the dimensionality of the latent variable against the quality of the reconstructed images, this work selects the results obtained with an MSE loss function and a 40-dimensional latent variable for further analysis.

- The above is the first stage.
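To make the SSIM comparison above concrete, the following sketch computes a simplified, single-window SSIM between an image and its reconstruction. It is an illustration only: the standard SSIM averages the same expression over local sliding windows (as library implementations such as scikit-image's `structural_similarity` do), and the function name `global_ssim` and the `data_range` default are assumptions.

```python
from statistics import fmean


def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM between two equally sized images (flat lists)."""
    c1 = (0.01 * data_range) ** 2      # stabilizing constants from the
    c2 = (0.03 * data_range) ** 2      # usual SSIM definition
    mx, my = fmean(x), fmean(y)
    vx = fmean((a - mx) ** 2 for a in x)
    vy = fmean((b - my) ** 2 for b in y)
    cov = fmean((a - mx) * (b - my) for a, b in zip(x, y))
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx * mx + my * my + c1) * (vx + vy + c2)
    )


original = [0.0, 0.5, 1.0, 0.5]
good = [0.05, 0.5, 0.95, 0.5]          # close reconstruction
bad = [1.0, 0.5, 0.0, 0.5]             # inverted reconstruction
print(global_ssim(original, good), global_ssim(original, bad))
```

A value close to 1 indicates a reconstruction that preserves the mean brightness, contrast, and structure of the input; comparing this value across latent dimensions is one way to read the figure.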

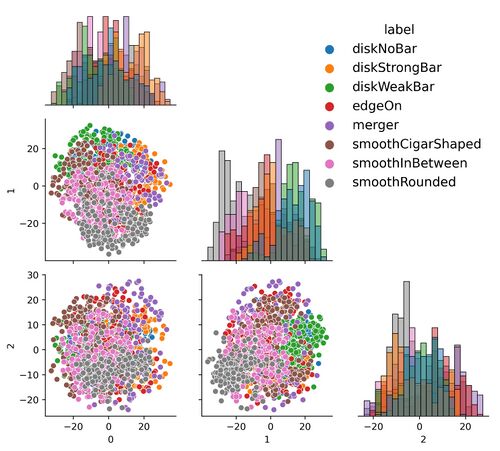

Latent variables and galaxy morphology

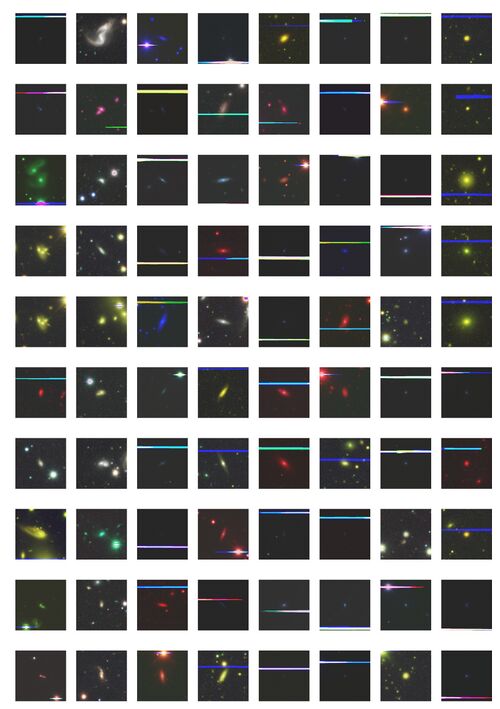

- Some reconstructed images.

- Latent Space Analysis.

- A random forest classifies the latent variables well. The details will be published in the paper.

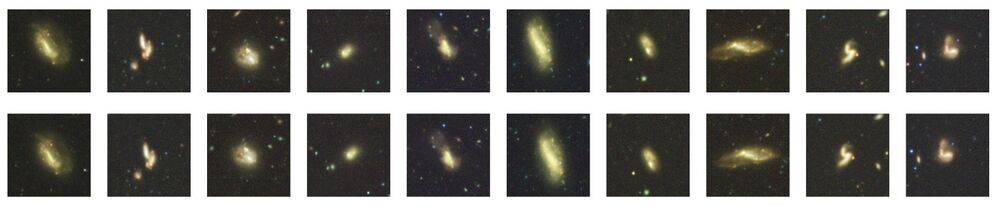

Outlier Latent Variable

- We find 1,308 outliers, accounting for 0.417% of all galaxy images.

- The outliers in the latent variable space are extracted to find galaxy images with rare morphological features and anomalous galaxy images.
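The source does not state how the outliers are identified. As one possible illustration, the sketch below flags latent vectors that lie unusually far from the centroid of all latent vectors. The distance-based rule, the threshold factor `k`, and the function name `find_outliers` are assumptions, not the method used in this work.

```python
import math
from statistics import fmean, pstdev


def find_outliers(latents, k=3.0):
    """Indices of latent vectors farther than mean + k*std from the centroid."""
    dim = len(latents[0])
    centroid = [fmean(v[i] for v in latents) for i in range(dim)]
    dists = [math.dist(v, centroid) for v in latents]
    threshold = fmean(dists) + k * pstdev(dists)
    return [i for i, d in enumerate(dists) if d > threshold]


# Toy data: 200 points near the origin plus one far away (index 200).
latents = [[0.01 * (i % 10), 0.01 * (i % 7)] for i in range(200)]
latents.append([5.0, 5.0])
outliers = find_outliers(latents)
print(outliers)  # [200]
```

The images behind the flagged indices would then be inspected by eye, since a large latent distance can indicate either a rare morphology or a corrupted image.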

Domain Adaptation

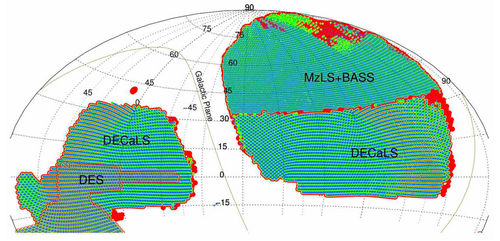

Data

- In this work, we take two surveys in DESI as an example and study the transfer between DECaLS and BASS+MzLS data in their overlapping sky region.

Question

- Apply the VAE to DECaLS and non-DECaLS data and compare the distances between their latent variables.

- Like.

- DisLike.
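A minimal sketch of the latent-distance comparison is given below. It assumes that the same galaxies have been matched between the two surveys in the overlapping region and that Euclidean distance is the metric of interest; neither detail is stated in the source, and the function names are hypothetical.

```python
import math


def latent_distance(z_a, z_b):
    """Euclidean distance between two latent vectors of equal length."""
    return math.dist(z_a, z_b)


def mean_pair_distance(latents_a, latents_b):
    """Average distance over matched pairs (same galaxy in both surveys)."""
    pairs = list(zip(latents_a, latents_b))
    return sum(latent_distance(a, b) for a, b in pairs) / len(pairs)


# Toy latent vectors for two matched galaxies.
z_decals = [[0.0, 0.0], [1.0, 1.0]]
z_other = [[3.0, 4.0], [1.0, 1.0]]
print(mean_pair_distance(z_decals, z_other))  # 2.5
```

A small distance for a matched pair means the VAE encodes the two survey images of that galaxy similarly, while a large distance points to a domain shift between the surveys.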

Methods

- The Domain Adaptation of VAE:

Results

See the paper (https://doi.org/10.1093/mnras/stad3181).

The data are available at: http://202.127.29.3/~shen/VAE/